Infrared light-based technologies drive interactive vehicles forward (MAGAZINE)

How long before our cars can fully drive themselves? That’s probably still at least a decade away. But the onset of true vehicle autonomy is already having a profound impact on car interiors. Why? Because drivers will no longer be drivers — they will be passengers with a fundamentally different set of expectations about what they can do while in the vehicle.

If you’re not actually driving your car, you’ll be doing all sorts of other things, such as working, watching videos, or perhaps even creating a piece of artwork during your morning commute. As in our homes, coziness and interior design will play a critical role. That means there will be increased demand for superior comfort, as well as for intuitive interactions with the vehicle. Infrared light plays an essential role in meeting these demands.

As such, a top priority for vehicle manufacturers going forward will be to adapt their vehicle lineups to meet new requirements, from interior lighting to everyday interactions with the vehicle. What will these new interactions look like? Imagine emerging from the supermarket with heavy grocery bags in each hand. Fortunately, your car notices you from 20 ft away and opens the trunk automatically so you can load your groceries quickly and easily. While this may sound futuristic, it is actually available in certain vehicles today.

The technology is based on an application commonly used in many smartphones and tablets — biometric identification. This technology analyzes the unique features of the user via iris scans and facial recognition. Iris scans, for instance, detect the unique pattern of a user’s iris, while facial recognition systems focus on certain two- or three-dimensional characteristics, such as the distance between the eyes. Based on that analysis, the system is then able to accurately identify the user.

Biometrics promises to usher in a vast number of applications in the vehicle interior beyond unlocking doors or opening the trunk, such as setting the desired seat position, turning on different colored lights, and automatically playing the driver’s favorite music. But this is just the beginning. The introduction of 3-D facial recognition is opening the door to many other applications beyond basic user identification.

All eyes on the driver

Drivers still don’t have the luxury of being able to ignore the road. They must pay steadfast attention when behind the wheel. However, the advent of driver assistance systems is helping to reduce accidents and save lives. A 2019 study by the Federal Statistical Office of Germany concluded that human error was responsible for nearly 90% of car accidents in that country. So it’s not surprising that the European Union (EU) has made driver assistance systems mandatory in some vehicles in Europe starting in 2022. Starting in 2026, this regulation will cover all new vehicles in the European market. These kinds of requirements are also gaining steam in the US.

With driver monitoring systems, a driver’s face is illuminated with infrared light that is invisible to the naked eye. A special CMOS camera, usually with a resolution of 1 to 2 megapixels, records about 30 or 60 images per second, depending on the model. The recorded images are evaluated by a downstream system that analyzes everything from the blink frequency of the driver to the direction of their gaze (Fig. 1). The system uses this data to draw conclusions about driver distraction or increasing drowsiness. Then it alerts the driver with the appropriate warning signals to recommend a break.
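As a rough illustration of how a downstream system might derive blink frequency from the camera frames, the following sketch counts closed-eye episodes in a per-frame sequence. The per-frame eye state is assumed to come from some upstream classifier that is not described in this article; the function name and input format are hypothetical.

```python
def blink_rate_per_minute(eye_closed, fps):
    """Estimate blinks per minute from per-frame eye-state flags.

    eye_closed: sequence of booleans, True when the eye is closed in a frame
                (assumed output of a hypothetical upstream classifier).
    fps:        camera frame rate, e.g. 30 or 60 images per second.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if not eye_closed:
        return 0.0

    blinks = 0
    previously_closed = False
    for closed in eye_closed:
        # A blink starts on each open-to-closed transition.
        if closed and not previously_closed:
            blinks += 1
        previously_closed = closed

    duration_s = len(eye_closed) / fps
    return blinks * 60.0 / duration_s


# Two seconds of frames at 30 fps containing two short blinks.
frames = [False] * 10 + [True] * 3 + [False] * 20 + [True] * 4 + [False] * 23
rate = blink_rate_per_minute(frames, fps=30)
```

A production system would also look at blink duration and gaze direction, and would smooth the result over a longer window before issuing a warning.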

The field of view (FoV) changes depending on where the driver monitoring system is located. FoV is the rectangular area that the camera-based system covers. As a result, the individual components need to match the FoV. For the light source, this means illuminating the designated area as brightly and uniformly as possible. Driver monitoring systems are commonly designed for an FoV of 35° to 45° vertically and 45° to 60° horizontally.
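To get a feel for what these angles mean in practice, the sketch below converts a horizontal and vertical FoV into the size of the rectangle covered at a given distance. It assumes a simple pinhole-style geometry and a flat target plane perpendicular to the optical axis; the function name and the example distance are illustrative choices.

```python
import math


def fov_footprint(distance_m, h_fov_deg, v_fov_deg):
    """Return (width_m, height_m) of the rectangle covered at distance_m.

    Assumes a flat plane perpendicular to the optical axis, so each side
    is 2 * d * tan(angle / 2).
    """
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg) / 2)
    return width, height


# Example: a 60 x 45 degree FoV at an assumed distance of 0.7 m.
width_m, height_m = fov_footprint(0.7, h_fov_deg=60, v_fov_deg=45)
```

For the example values, the covered rectangle is roughly 0.81 m wide and 0.58 m high, which is the area the light source has to illuminate uniformly.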

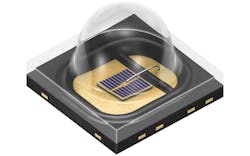

It’s also essential for the light sources to constantly illuminate the area with an irradiance of around 4–5 W/m². This value is calculated based on the average distance of the emitter to the driver, which is typically between 40 and 110 cm. Light sources with a wavelength of 850 nm can cause the so-called “red glow” effect, which the human eye perceives as red flickering. At 940 nm, the red glow is virtually eliminated. Additionally, the use of a camera with an integration time between 0.5 and 4 ms is recommended. The Oslon Black IRED (infrared LED) family of emitters is designed to meet the requirements of driver monitoring systems with high optical output, various beam angles, and compact dimensions for space-constrained automotive applications (Fig. 2).
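The irradiance target and integration time can be turned into a first-order power budget. The sketch below is an idealized estimate only: it assumes perfectly uniform illumination of a flat rectangular FoV with no optical losses, and it assumes the emitter is pulsed only while the camera integrates. Neither assumption comes from a datasheet, and the function names are hypothetical.

```python
import math


def required_radiant_flux_w(irradiance_w_m2, distance_m, h_fov_deg, v_fov_deg):
    """Peak radiant flux needed to reach the target irradiance over the FoV.

    Idealized: flat target plane, uniform illumination, lossless optics.
    """
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg) / 2)
    return irradiance_w_m2 * width * height


def emitter_duty_cycle(integration_time_s, frames_per_second):
    """Fraction of time the emitter is on if pulsed only during integration."""
    return integration_time_s * frames_per_second


# Example: 5 W/m^2 at 1.1 m over a 60 x 45 degree FoV, 4 ms integration at 30 fps.
peak_flux_w = required_radiant_flux_w(5.0, 1.1, 60, 45)
duty = emitter_duty_cycle(0.004, 30)
average_flux_w = peak_flux_w * duty
```

Because the required flux grows with the square of the distance, the far end of the 40 to 110 cm range dominates the emitter selection, while a short integration time keeps the average optical power and heat load low.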

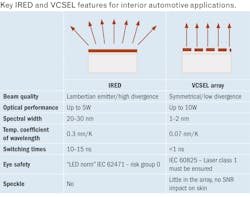

Vertical cavity surface-emitting lasers (VCSELs) also offer a distinct advantage for driver monitoring systems. They combine the key characteristics of two illumination technologies: the simple packaging of an infrared LED with the narrow spectral width and speed of a laser. Because the spectral width is less than 3 nm, the red-glow effect described previously is even weaker than with an IRED at 940 nm.

Using a narrow band-pass filter matched to the narrow spectrum of a VCSEL, system manufacturers can benefit from higher immunity to sunlight and a higher overall signal-to-noise ratio (SNR). Additionally, VCSELs have a radiation characteristic optimized for camera imaging that compensates for the camera’s vignetting, removing the need for a secondary reflector.
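The benefit of a narrower filter can be approximated with a simple scaling argument. The sketch below assumes that ambient sunlight is spectrally flat across the passband, that both filters transmit the emitter's signal completely, and that noise is dominated by shot noise from the ambient background. The passband widths in the example are hypothetical values chosen for illustration, not figures from any product.

```python
import math


def ambient_suppression(wide_passband_nm, narrow_passband_nm):
    """Compare a narrow band-pass filter against a wider one.

    Returns (background_ratio, snr_gain):
      background_ratio: ambient light collected relative to the wide filter,
                        assuming a flat ambient spectrum.
      snr_gain:         SNR improvement assuming background shot noise
                        dominates, so noise scales with sqrt(background).
    """
    if wide_passband_nm <= 0 or narrow_passband_nm <= 0:
        raise ValueError("passband widths must be positive")
    background_ratio = narrow_passband_nm / wide_passband_nm
    snr_gain = math.sqrt(wide_passband_nm / narrow_passband_nm)
    return background_ratio, snr_gain


# Hypothetical example: 40 nm filter for a broad emitter vs. 10 nm for a VCSEL.
background_ratio, snr_gain = ambient_suppression(40, 10)
```

Under these assumptions, cutting the passband to a quarter lets in a quarter of the sunlight and doubles the SNR. Real filters shift with temperature and angle of incidence, so practical passbands include some margin.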

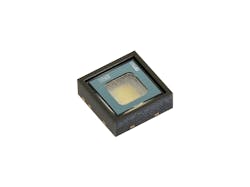

An AEC-Q102 qualified VCSEL flood illuminator like the Tara2000-AUT provides uniform illumination, multiple optics options, and a compact footprint to replace multiple lower-power emitters, thereby reducing the number of components and the space required for driver monitoring and in-cabin monitoring systems (Fig. 3).

No item — or lifeform — left behind

Infrared light can also help identify the seating position of the vehicle’s occupants and prevent valuables from being accidentally left behind in the vehicle or, even more critically, alert drivers that they still have occupants in the automobile. Imagine renting a car and leaving your purse in the back seat when you return it. The in-cabin monitoring system detects your forgotten item and alerts you via a text message on your smartphone. What’s more, these systems can help prevent babies and dogs from being locked in vehicles by accident.

To generate reliable and high-quality data that enables these solutions, a sophisticated 3-D time-of-flight (ToF) application is required. In-cabin monitoring solutions based on ToF usually consist of a photonic mixer device (PMD) camera, a VCSEL as the infrared light source, and a special detector. The VCSEL emits light from hundreds of individual apertures at right angles to the chip surface. Specialized optics bundle the light into the FoV. In simple terms, the VCSEL sends a certain number of parallel light beams into the environment. As soon as one of these beams encounters an object in the vehicle, such as a headrest or a child’s car seat, it is reflected and sensed by the detector. The time the light beam takes to travel from the light source to the object and back again, together with the known speed of light, gives the distance to the object.
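The distance calculation described here follows from the speed of light: the beam covers the emitter-to-object distance twice, so the distance is half the round-trip path. A minimal sketch, with hypothetical function names:

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_s):
    """Distance to the object given the measured round-trip time in seconds."""
    # The light travels out and back, hence the division by two.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def round_trip_for_distance(distance_m):
    """Round-trip time in seconds for an object at distance_m."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_S


# An object 1 m away returns the light after roughly 6.7 nanoseconds.
t_1m = round_trip_for_distance(1.0)
d_back = distance_from_round_trip(t_1m)
```

The nanosecond timescales involved are why ToF systems need light sources that can be switched very quickly.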

Together with the data provided by the other apertures, a high-resolution 3-D image is produced with corresponding depth information. Downstream software can then use the measurement results to determine which object is involved. Depending on the installation location, different FoVs are required for in-cabin monitoring systems — typically 110° to 160° horizontally and 80° to 100° vertically. These systems are usually integrated centrally in the ceiling of the vehicle to cover as much of the interior as possible.

The magic of gesture control

Gesture control solutions are the next big thing in the auto industry, and infrared LEDs and VCSELs can make them happen. Simpler gesture control systems typically rely on discrete components such as a separate emitter and detector. These solutions can detect basic gestures and motion sequences. To ensure that the light from an IRED arrives uniformly in the defined FoV, it is necessary to bundle it with the aid of secondary optics.

However, the additional optics require slightly more space. IREDs in a smaller package (1.6 × 1.6 mm), such as the Oslon Piccolo, can output 1.15 W at 1 A and can be switched at around 30 MHz for simple gesture recognition systems.

The properties of the VCSEL, such as the extremely fast switching frequencies of up to 100 MHz, enable ToF-based, high-end solutions that can recognize and process significantly more complex movements and gestures in three dimensions. In this context, the ability to generate depth-related data is essential. Typically, gesture recognition systems are integrated into the housing of a display, as the focus is on interaction with the vehicle’s main control unit. The nearby table compares some key parameters of IREDs and VCSELs that are used in the described interior automotive monitoring and control systems.
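One way to see why the modulation frequency matters is to look at a continuous-wave, phase-measuring ToF scheme. The article does not state which ToF method is used, so this is an assumption for illustration only. In that scheme, depth is derived from the phase shift between emitted and received light, and the modulation frequency sets the range over which the phase is unambiguous. The function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def unambiguous_range_m(modulation_hz):
    """Maximum distance before the measured phase wraps around (2*pi)."""
    return SPEED_OF_LIGHT_M_S / (2 * modulation_hz)


def distance_from_phase(phase_rad, modulation_hz):
    """Distance corresponding to a measured phase shift in radians."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4 * math.pi * modulation_hz)


# At 100 MHz the phase wraps after about 1.5 m.
range_100mhz = unambiguous_range_m(100e6)
half_range = distance_from_phase(math.pi, 100e6)
```

For a fixed phase resolution, a higher modulation frequency yields finer depth steps, at the cost of a shorter unambiguous range. About 1.5 m at 100 MHz is in the same range as the short working distances of a gesture sensor mounted in a display housing.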

The road ahead

In a few short years, car cabins will look fundamentally different than they do today. It will be exciting to see which applications and solutions rise to the forefront. Broad acceptance by end users will play a key role, but it is ultimately up to system manufacturers to decide which technologies best fit their architecture.

It’s clear that systems integrating infrared light sources, sensors, and other components will play a starring role in the automobiles of tomorrow, but the requirements regarding the complexity of these systems will vary. Companies that have a unique product portfolio with all key emitter technologies and power classes will likely emerge as leaders in the automotive supply and design chain.

Get to know our expert

FIRAT SARIALTUN is In-Cabin Sensing Segment manager at optical sensor and light solutions manufacturer ams Osram, responsible for the optical infrared illumination portfolio based on IRED and VCSEL technologies. Sarialtun has extensive experience in the automotive electronics sector, having worked with Texas Instruments and Sensirion. He has a bachelor of science degree in electrical-electronics engineering from Boğaziçi Üniversitesi (Bosphorus University) in Turkey, and a master of science degree in electrical, electronics and communications engineering from Technische Universität München (Technical University of Munich) in Germany.